Basics: Calibration and Accuracy

Introduction

Do you trust the values from your sensors? You shouldn't! There are many ways values can go wrong. This "Basics" page covers two topics: tolerances, and how to calibrate a sensor where that is possible.

How much accuracy do I need?

Well, most people would say "As much as possible". And that's nonsense, that simple.

If you want to report weather data to an official network you should be accurate for sure. On the other hand, if you just want a comfy temperature, you will set the desired value on your home control a bit higher or lower, that's it. Comfort is not reading thermometers, it's just feeling good. It does not make any sense to read the temperature in the living room with 0.01°C accuracy.

The golden rule of metering: Do not measure as precisely as possible but as precisely as needed.

An example: You're using a digital voltmeter to check mains voltage. It is of absolutely no interest whether the voltage is 231.4V or 232.2V! What matters is whether your multimeter shows around 230V or 150V. But your multimeter can show a 1/10V digit... nice. Get over it: It is useless for that purpose.

Tolerances

Every sensor has tolerances. High priced precision sensors are not tolerance free - they just have lower tolerances! Where do these tolerances come from?

Tolerances by Manufacturing

The manufacturing tolerance of any sensor has many causes.

It starts with the basic chemicals the semiconductor itself is made of. Semiconductors are made of substances of extreme purity. Literally every molecule of "dirt" in these substances changes the characteristics of the semiconductor a bit. As nothing is really 100% clean, every sensor will differ a bit. Then these sensors are produced and assembled on machines. These machines have a production tolerance too. All this adds up to the tolerance of your sensor. Every sensor has individual tolerances.

What can you do against these tolerances?

In short: Nothing! They are there, they exist. Period. You can minimize them by buying close-tolerance types... you will note quickly that this is an expensive thing to do. Calibration is a way out.

Tolerances by Environment

The biggest environmental influence is temperature. Semiconductors are usually highly sensitive to temperature, so a sensor's reading may vary with the ambient temperature. Most modern sensors have temperature compensation, so this factor is usually negligible.

Moisture can influence the values of your sensor too. As even pure water is slightly conductive, it can change electrical values. Together with oxygen from the air it can be corrosive. This is of interest when using outdoor sensors in airtight cases.

Dust is an underestimated factor. It might blind your luminosity sensors, or it might clog filter membranes of a humidity sensor. It settles everywhere over time and may even mislead a dust sensor.

In the broader sense even things like mains voltage fluctuation and quality of power supplies are environmental factors... these are the environment for the sensor.

Tolerances by Aging

Like you and me, sensors get older... Over time temperature fluctuation, corrosion and other factors leave some traces. Even just using them can change the characteristics of some parts. Dust sensors use an LED or laser diode, for example - these diodes lose luminosity over time.

Tolerances by A/D-Conversion

Sensors themselves usually give analog values. For the ESP chip we want digital values, if possible temperature compensated and/or converted to a useful value. So the analog value must be converted into bits and bytes. This A/D conversion adds a tolerance of usually +/- 1...2 digits (the last digit may vary by +/- 1...2 counts).
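To get a feeling for what "+/- 1...2 digits" means in real units, here is a small Python sketch. It assumes a hypothetical 10-bit converter with a 1.0 V input range; both numbers and the function names are only illustrative, so check the values of your own hardware.

```python
def lsb_size(full_scale, bits):
    """Size of one count (one 'digit') of an ideal A/D converter."""
    return full_scale / (2 ** bits)


def conversion_band(full_scale, bits, counts):
    """Width of a +/- 'counts' band, in the unit of full_scale."""
    return counts * lsb_size(full_scale, bits)


# Assumed example: 10-bit converter, 1.0 V input range, +/- 2 counts
step = lsb_size(1.0, 10)
band = conversion_band(1.0, 10, 2)
print(f"one count = {step * 1000:.3f} mV, +/-2 counts = +/-{band * 1000:.3f} mV")
```

Whatever the sensor delivers, the converted value cannot be trusted any finer than this band.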

Which accuracy does my sensor have?

Your first source for accuracy values is the manufacturer's datasheet. We have listed some common sensors here, but most datasheets give far more information.

Be aware: Quality costs money, you get what you pay for. A cheap DS18B20 temperature sensor from China will usually work. But a DS18B20 from Dallas or Maxim plays in another league in price, quality and accuracy. If you buy cheap ones you should expect bigger tolerances.

| Name | Sensor | Tolerance | Unit | Extra information |
|---|---|---|---|---|
| BME280 | Pressure | ±0.12 | hPa | relative |
| BME280 | Pressure | ±1 | hPa | absolute |
| BME280 | Temperature | ±0.5 | °C | @25°C |
| BME280 | Humidity | ±3 | %rH | 20%rH-28%rH |
| DS18B20 | Temperature | ±0.5 | °C | -10°C-+85°C |
| DHT-22 | Temperature | ±0.2 | °C | |
| DHT-22 | Humidity | ±5 | %rH | |
| SHT-10 | Temperature | ±0.5 | °C | |
| SHT-10 | Humidity | ±4.5 | %rH | |
| SHT-11 | Temperature | ±0.4 | °C | |
| SHT-11 | Humidity | ±3 | %rH | |
| SHT-15 | Temperature | ±0.3 | °C | |
| SHT-15 | Humidity | ±2 | %rH | |

Calibrating

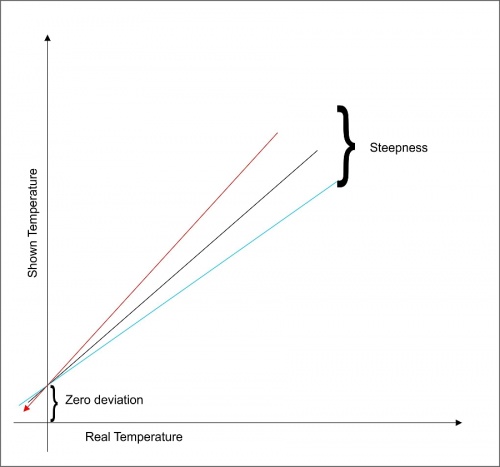

What is calibration? It is a way to make an imperfect sensor give better values. It needs a bit of math, but it doesn't need witchcraft or rocket science. The way to reach this goal is understanding two basic terms: zero point deviation and steepness.

Zero point deviation

An example: If you put your temperature sensor at exactly 0°C, it should show 0°C. Most won't do that, they will show some deviation. For exact metering this has to be corrected by adding or subtracting a value so the reading becomes 0°C.

Steepness

Now we put the temperature sensor into another temperature, let's say 25°C. Now your sensor should show 25°C, but usually it will show a slightly different value. This can be corrected by multiplication with a correction factor.

How to calibrate?

This depends on the sensor you use and on whether you have a reference of good accuracy. Let's take the example from above for demonstration, as it already shows the way of calibration. Let's say you have a waterproof DS18B20 temperature sensor, which is the easiest to calibrate. You need a thermos jug, some ice from the freezer, water and a good thermometer.

First step: Correcting the zero point

Melting ice has a temperature of 0°C - it's the easiest reference you can get. Fill the thermos jug half with water and put some ice cubes into it, put your sensor into the ice/water mix and wait - at least 15...30 minutes. Stir or shake the jug now and then to avoid layers of different temperature. Now note the temperature you read from the sensor. Let's say it is 0.3°C, for example. Edit the formula field inside ESP Easy to:

%value% -0.3

to compensate the +0.3°C reading at zero. It should show 0°C now.

Second step: Calibrating the steepness

Now empty the thermos jug and fill in warm water; around 30°C...40°C is a good value. Put in the sensor and the thermometer. The long glass thermometers for chemical labs are useful for this purpose and they are not expensive. Again, wait at least 15 minutes - settling the sensor takes a while. Note the readings of the sensor and the thermometer. Let's say the thermometer shows 35°C and the sensor, with the zero point formula from the first step already active, shows 34.7°C. The correction factor is a simple calculation now:

35/34.7 = 1.0086

Change the above formula into

(%value% -0.3) * 1.0086

and submit.

You're done! Your sensor is now calibrated.
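The two steps can also be written down as a small Python sketch, which is handy for checking the numbers before typing them into the formula field. It assumes the warm water reading of 34.7°C was taken with the zero point correction already applied; the function names are made up for this illustration and are not part of ESP Easy.

```python
def zero_offset(reading_at_zero, reference_at_zero=0.0):
    """Value to subtract so the sensor shows the reference at the zero point."""
    return reading_at_zero - reference_at_zero


def steepness_factor(reference_warm, corrected_reading_warm):
    """Correction factor from the warm water point.

    corrected_reading_warm is the sensor value AFTER the zero offset
    has been subtracted (assumption, see text above).
    """
    return reference_warm / corrected_reading_warm


def calibrate(raw_value, offset, factor):
    """Same shape as the formula (%value% - offset) * factor."""
    return (raw_value - offset) * factor


offset = zero_offset(0.3)               # sensor showed 0.3 in ice water
factor = steepness_factor(35.0, 34.7)   # thermometer 35.0, sensor 34.7

# Build the text for the formula field from the measured values
print(f"(%value% - {offset:.1f}) * {factor:.4f}")
```

Note that the simple multiplication only works this way because the zero point reference is 0°C; with another reference point the factor would have to be applied to the difference from that point.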

Limits

Yes, this method has its limits. First, you need a really exact reference. Technically you need a calibrated thermometer, which is expensive. Moreover, it would need a pump to circulate the water permanently to homogenize the temperature in the bottle. Worse, the pump has to be outside the bottle as the motor gets warm and influences the temperature...

Another point: This method works really well only on completely linear sensors. Digital sensors are usually linearized internally, so this is not too big a problem. It becomes a problem with analog sensors like the GP2Y10 dust sensor. When it gets near its limits it is somewhat non-linear. Anyway, the method is good enough to give some calibration to common sensors. So cheap sensors can be used with good results.

But always remember: If you want to calibrate really exactly to 0.1°C accuracy you need a reference of 0.01°C accuracy! Such references are not available at affordable prices, they cost a fortune.

So in the end the possibilities for calibration are limited.

Hints, tips, tricks

Other sensors... well, everything depends on getting a good reference. Calibrating the zero point deviation of a luminosity sensor is easy - just put it into a completely dark box. Calibrating the steepness can be done the same way as for temperature - if you have a professional luminosity meter with good calibration. Remember that the sensor of that luminosity meter may behave differently from your sensor. It might give different values with daylight and artificial light.

What if your sensor is not waterproof and you want to calibrate temperature? Use a waterproof plastic bag, even a cleaned and dried condom works well. :)

The dual point calibration works roughly for every sensor - some improvisation is needed.

It is more difficult to calibrate sensors without a zero point. Air pressure is a good example - you won't get the sensor into a 100% vacuum. Even if you could, the sensor would usually be outside its measuring range then, so the value is not usable. What can you do? You need some math for that. You will have to take several values from the sensor and a reference meter and calculate the formula values. To be honest - it does not pay off. The differences are not that big with today's sensors. It is far more important to set the correct altitude within ESP Easy to get realistic results.
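If you want to try the math anyway, one common way is a least-squares straight line through several sensor/reference pairs. The following Python sketch assumes the sensor is linear in the measured range; the pressure pairs are invented for illustration and the function name is hypothetical.

```python
def fit_linear(sensor_values, reference_values):
    """Least-squares line: reference = slope * sensor + intercept."""
    n = len(sensor_values)
    if n < 2 or n != len(reference_values):
        raise ValueError("need at least two matching value pairs")
    mean_x = sum(sensor_values) / n
    mean_y = sum(reference_values) / n
    sxx = sum((x - mean_x) ** 2 for x in sensor_values)
    if sxx == 0:
        raise ValueError("sensor values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(sensor_values, reference_values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented example pairs in hPa: (sensor reading, reference meter)
sensor = [990.0, 1000.0, 1010.0, 1020.0]
reference = [991.5, 1001.6, 1011.7, 1021.8]

slope, intercept = fit_linear(sensor, reference)
print(f"%value% * {slope:.4f} + {intercept:.2f}")
```

The printed line has the shape of an ESP Easy formula. The more pairs you take, and the wider they are spread over the range, the more trustworthy the result.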

Dust sensors are somewhat similar. As you will never get a "clean room" without any dust, it is not really possible to get a precise zero deviation. An acceptable approximation is to log the values and take the lowest value over a longer time as the zero point.
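A minimal Python sketch of that approximation, assuming the logged readings are available as a list. The sample values and function names are made up for illustration.

```python
def estimate_zero_point(logged_values):
    """Take the lowest logged reading as the approximate zero point."""
    if not logged_values:
        raise ValueError("need at least one logged value")
    return min(logged_values)


def subtract_zero(value, zero_point):
    """Remove the zero point; never report less than zero dust."""
    return max(value - zero_point, 0.0)


# Invented raw readings collected over a longer time
log = [41.0, 38.5, 52.0, 37.2, 44.8, 39.9]

zero_point = estimate_zero_point(log)
corrected = [subtract_zero(v, zero_point) for v in log]
print(zero_point, corrected)
```

The longer the log, the better the chance that it contains a moment with really clean air.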

Some thoughts about decimals and accuracy

ESP Easy usually offers 2 decimals as default. Let's again take the DS18B20 as an example. It has a tolerance of +/- 0.5°C. If you have two DS18B20 side by side, their readings might differ by up to 1°C. So if you read 24.12°C and 24.94°C, which one is right? To be exact - neither of them. Two decimals with 0.5°C tolerance is nonsense, that simple. The real temperature at the first one might be anywhere in 24.12 +/- 0.5 = 23.62°C ... 24.62°C. For the second one it is 24.44°C ... 25.44°C. As you see, both might really be at 24.5°C... what are two decimals worth here?

For realistic results the sensors have to be calibrated. But please realize that even a calibrated reference thermometer still has a tolerance, just a smaller one. So if you calibrate your temperature sensors with a really accurate calibrated thermometer as a reference, you might reasonably use one decimal.
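The same reasoning as a short Python sketch: build the tolerance band around each reading and look at where the two bands overlap. The function names are only illustrative.

```python
def tolerance_band(reading, tolerance):
    """Range in which the real value may lie for a given reading."""
    return (reading - tolerance, reading + tolerance)


def overlap(band_a, band_b):
    """Common range of two bands, or None if they do not overlap."""
    low = max(band_a[0], band_b[0])
    high = min(band_a[1], band_b[1])
    return (low, high) if low <= high else None


band_1 = tolerance_band(24.12, 0.5)
band_2 = tolerance_band(24.94, 0.5)
common = overlap(band_1, band_2)
print(band_1, band_2, common)
```

As long as the bands overlap, both sensors are within their specification and neither reading can be called wrong.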

Where are more decimals useful?

If you use a pulse counter it might be good to use 2 or 3 decimals. Let's say you have an electricity meter that gives 2 pulses per watt-hour. If you're counting the pulses directly, decimals are useless. There are no "half pulses". To get your consumption in kWh you will use the formula %value% / 2000. Here 3 decimals are meaningful as the pulse counter is really that exact.
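A small Python sketch of that conversion, assuming the meter emits 2 pulses per watt-hour, which is what the divisor 2000 implies. The function name and the pulse count are made up for illustration.

```python
def pulses_to_kwh(pulses, pulses_per_wh=2):
    """Convert a pulse count to kWh (1 kWh = 1000 Wh)."""
    return pulses / (pulses_per_wh * 1000)


# Assumed example: 1234 pulses counted
energy = pulses_to_kwh(1234)
print(f"{energy:.3f} kWh")
```

With 2000 pulses per kWh a single pulse is 0.0005 kWh, so the third decimal still carries real information.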